I fear aWifeRef remains unwritten.

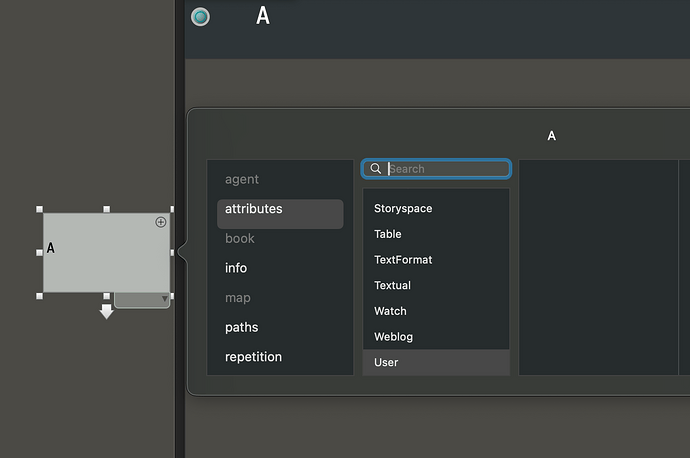

I’ll just show you what happens. I open a new file and Claude should create a note, give it an attribute and assign the value. Maybe I don’t understand how it works yet.

create_note

{ `name`: `A` }

OK: created /A in (null)

set_value

{ `notes`: `/A`, `value`: `5`, `attribute`: `Testzahl1` }

ok changed 1 of 1 notes

I had Claude check it. Claude says that it does not work via the API and is now trying it via an action.

Do

{ `note`: `/A`, `action`: `$Testzahl1(document)=5` }

set_value

{ `notes`: `/A`, `value`: `5`, `attribute`: `Testzahl1` }

same result.

Claude recommends that I should enter everything manually

True: Claude cannot currently create user attributes*. But it certainly ought to be able to use any attribute that exists, including user attributes. I’m pretty sure I’ve done this.

Have you defined Testzahl in this document?

- Actually, Claude can create user attributes through a `createAttribute` action! I forgot this. Claude doesn’t know about this, because it’s not in Claude’s readings (/Hints/AI/Claude/Readings/Using Tinderbox). But you can explain it to Claude, or add it to the readings.

Thanks, I will try to explain to Claude.

10 minutes later. I peeled myself out of bed and tested. I explained the mechanism to Claude and now it works. The assignment also works (which I had actually tested before). Great. I’m slowly learning to understand it.

So are we all!

Reset:

I have recreated my collection in a new file. Claude now knows how to create attributes.

But I’m surprised that Claude didn’t create the structure /Hints…. like /Hints/AI/Claude/Readings/Using Tinderbox

Naive as I am, I asked Claude to remember - as planned by the programmer - how Claude has to implement things and what there is to consider.

Claude then created a new structure for me called System Documentation. Under it, Claude created notes such as:

- User Attributes
- Agent Queries
- Data Capture Workflow
- Maintenance & Extension
- Predefined Values
- Displayed Attributes

After each adjustment or new entry, the AI updates these notes automatically.

I experience the combination of Claude and Tinderbox every day.

Maintenance & Extension:

Guide to system maintenance and extensions

ADDING NEW ATTRIBUTES:

Syntax: createAttribute("Name","Type","Default","user")

Types: string, number, date, boolean

CREATING NEW AGENTS:

1. Create an agent note (kind="agent")

2. Set the AgentQuery attribute with a query string

3. The agent automatically collects matching notes

MAINTENANCE TASKS:

- Update Aktueller_Wert regularly

- Add ratings after tastings

- Change the status of opened bottles

EXTENSION OPTIONS:

- New countries/regions via agents

- Price history via additional attributes

- Tasting groups via categories

- Image links via File attributes

BACKUP RECOMMENDATION:

Back up the .tbx file regularly

Claude suddenly can’t implement some things anymore. I have the impression that it does not know how to do it, because these /Hints are no longer created by Tinderbox.

- The TBX(s) used in this fashion need additional content in /Hints (as inserted by the Tinderbox app).

Found the problem. Disabling and then re-enabling “Enable AI Integration in this document” in these settings fixes the problem.

It seems to be a bug in Tinderbox, because even newly created files did not work.

My summary

Claude:

- Makes very useful suggestions for organizational structures. However, these vary in quality and sometimes need to be corrected by clarifying the request.
- Invents answers. Example: I had the attributes “open” and “fill level.” However, I had left the fill level blank in my source data. Claude invented percentage values for each bottle.
- Does not check its own work unless I ask it to. Example: Claude had applied various code, but the result was not there.
- Repeatedly forgets instructions. Example: I asked it to organize the notes into a structure, but after a while it put the data in the root. When I pointed out the error, Claude remembered what it was supposed to do.
- Is very good at finding, filtering, and organizing data from the web.
- Does not always implement things logically. Example: Claude created a prototype pBottles with a series of attributes. I explained that an attribute “dealer” would make sense for the bottles. Claude agreed and changed all the bottles, but left the prototype untouched.
- Is annoying because the chat window limits the amount of text, and even the Pro plan is not enough to process larger data sets.

Claude is fun when you supervise it and check the results carefully.
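The "check the results carefully" step can be made mechanical: after every write, read the value back and compare. Here is a minimal Python sketch of that idea. `FakeDocument`, `set_value`, `get_value` and `known_attributes` are hypothetical in-memory stand-ins, not the Tinderbox or Claude API, and the "reports ok but stores nothing" behaviour is only a guess at the kind of failure described in this thread.

```python
from typing import Dict, Optional, Set


class FakeDocument:
    """In-memory stand-in for a document (hypothetical, not the Tinderbox API)."""

    def __init__(self) -> None:
        self.notes: Dict[str, Dict[str, str]] = {}
        self.known_attributes: Set[str] = set()

    def set_value(self, path: str, attribute: str, value: str) -> str:
        # Assumed failure mode: the call reports success even though an
        # undefined attribute is silently not stored.
        if attribute in self.known_attributes:
            self.notes.setdefault(path, {})[attribute] = value
        return "ok"

    def get_value(self, path: str, attribute: str) -> Optional[str]:
        return self.notes.get(path, {}).get(attribute)


def set_and_verify(doc: FakeDocument, path: str, attribute: str, value: str) -> bool:
    """Write a value, then read it back instead of trusting the reply."""
    doc.set_value(path, attribute, value)
    return doc.get_value(path, attribute) == value


doc = FakeDocument()
print(set_and_verify(doc, "/A", "Testzahl1", "5"))  # attribute not defined yet
doc.known_attributes.add("Testzahl1")
print(set_and_verify(doc, "/A", "Testzahl1", "5"))  # attribute now defined
```

The same habit works in a chat session: after each change, ask Claude to read the value back and report what it actually finds.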

I probably also need to learn how Claude works best.

With Tinderbox, it still takes some experience.

But that’s a great prospect.

Tinderbox disables AI Integration by default. If you were using the backstage releases, you also will need to disable and then reenable AI Integration to switch to Tinderbox 11. I should have made this clearer.

This is an interesting and fundamental problem with LLMs.

Today’s LLMs can process between 20,000 and 100,000 “tokens” (roughly speaking, words) before their performance degrades. After that, they lose coherence.

The Notes container (/Hints/AI/Claude/Notes) is an effort to work with this limitation. By adding notes from session 1, Claude can benefit from those notes in sessions 2 and 3. The notes must be concise; if you note down everything, you won’t have much context space left for any new work.
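To make the trade-off concrete, here is a rough Python sketch of keeping session notes within a fixed budget. It assumes one token per word, which is only the "roughly speaking" approximation above. `estimate_tokens`, `select_notes` and the example notes are invented for illustration and are not part of Tinderbox or Claude.

```python
from typing import List


def estimate_tokens(text: str) -> int:
    """Very rough estimate: one token per whitespace-separated word."""
    return len(text.split())


def select_notes(notes: List[str], budget: int) -> List[str]:
    """Keep the newest notes that fit within `budget`, in their original order."""
    kept: List[str] = []
    used = 0
    # Walk from newest to oldest so recent sessions win when space is tight.
    for note in reversed(notes):
        cost = estimate_tokens(note)
        if used + cost > budget:
            continue  # too long to fit; a shorter, older note may still fit
        kept.append(note)
        used += cost
    kept.reverse()
    return kept


# Invented example notes, oldest first.
session_notes = [
    "Attributes are created with createAttribute; see readings.",
    "Distilleries live in one container; agents only hold duplicates.",
    "Always assign the prototype after creating a note.",
]
print(select_notes(session_notes, budget=16))
```

With a budget of 16 words, the second note is dropped because it does not fit next to the newest one. That is the practical argument for keeping each note short.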

Claude is wildly overconfident about its ability to use Tinderbox. It’s read a lot of software code, so it assumes that if there’s a feature it knows nothing about, it probably works like it did in some completely different system. A clear, concise explanation can help a lot. The Posters note in readings is one example; a remarkably short explanation generally suffices to explain a fairly tricky Tinderbox feature. (And it’s even trickier for Claude, since Claude cannot see the poster!)

So, ironically and certainly unintentionally, Action code’s syntactic idiosyncrasies weigh against it. [From the department of ‘outcomes we didn’t foresee’.] The ‘Blind men and the Elephant’ also come to mind here in grokking the differing perspectives of AI and human.

Thank you for the patient explanation. I only ever used version 11.

I’ll look at the example closely.

Currently, Claude expands my new collection in Tinderbox with new ideas, but always forgets to delete unnecessary structures, although it had previously analyzed and wanted to replace them.

Claude forgets things in the middle of implementation. This is how Alzheimer’s must feel.

As a result, I learned a lot about Tinderbox this weekend. WIN-WIN

Maybe there are still translation problems. Claude just found a mistake in an agent: the query and action code were missing. Claude showed the commands it had applied to set both, then explained that everything was perfect now. But the query was still empty. After I inserted the code manually, it worked.

Sorry, I didn’t write down the sequence of commands.

To be fair, I’ve seen Claude try to use ~=, which might be borrowed from PERL, or might be Julia (?!).

Also, up until v5, Sets (the only list-based Type) allowed the ~ as an add/remove toggle in expressions, so it’s there in older documents.

It’s like a curse. Working with Claude is difficult. One minute I’m talking to a genius, the next to a toddler.

I have implemented a comprehensive Whisky collection in Tinderbox. Claude correctly analyzes this structure at the beginning of the session and tells me the content and purpose of the collection, as if it understands it completely.

But with each subsequent task I give Claude, it becomes clear that it hasn’t remembered any of it.

Example: Claude was supposed to expand the pDestille prototype with geodata attributes. In the /Destille container, Claude was supposed to analyze the existing distilleries and search for the relevant geodata online. This data was then to be entered accordingly.

Claude thought this was a great idea, and helpful results appeared in the chat window.

Unfortunately, Claude did not add the new attributes to the pDestille prototype alongside the existing ones, but replaced the existing ones.

The error is easy to correct.

I explained the error to Claude and it understood immediately. It correctly analyzed what was wrong and what needed to be reconstructed.

I was surprised that it checked the existing user attributes and filtered out those that might be relevant. However, by referring back to the chat, it could have recognized which attributes had been set previously. This means that the new assignment is not always identical.

Finally, Claude praised itself. A review of the results was sobering. pDistillery was still incorrectly assigned.

In this case, it was a simple mistake, but on several occasions Claude had also overwritten the contents of all the notes. Without a backup, that’s no laughing matter.

Which also surprises me. At the beginning of the session, I had Claude read the notes under /Hints. The structure of the collection can also be found there.

In the above task, Claude found 117 distilleries to fill in, even though fewer than 40 exist in the /Distills container.

The error was that Claude had previously created an agent to evaluate the distilleries. It counted the agent’s contents as well, even though it should have recognized that they were duplicates and that the distilleries should be determined from the main container. The information about the created agents was stored in /Hints.
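The miscount is easy to reproduce on paper. The Python sketch below models each item with its own path plus the path of the note it duplicates, then counts only originals under the main container. The `Note` class, the `original_path` field and the example paths are hypothetical illustrations, not how Tinderbox stores agent contents.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Note:
    path: str           # where the item sits in the outline
    original_path: str  # for a duplicate, the path of the note it duplicates


def count_originals(items: List[Note], container: str) -> int:
    """Count distinct original notes that sit directly or indirectly under `container`."""
    prefix = container.rstrip("/") + "/"
    originals = {
        n.original_path
        for n in items
        if n.path == n.original_path and n.path.startswith(prefix)
    }
    return len(originals)


# Invented example: two real notes, each also collected by an agent.
items = [
    Note("/Distills/A", "/Distills/A"),
    Note("/Distills/B", "/Distills/B"),
    Note("/Agents/By Region/A", "/Distills/A"),
    Note("/Agents/By Region/B", "/Distills/B"),
]
print(len(items))                           # naive count includes the duplicates
print(count_originals(items, "/Distills"))  # counts only the originals
```

Telling Claude explicitly which container holds the originals, and that agents only collect duplicates of them, amounts to the same filter in words.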

Claude does great things, but often it’s two steps forward and three steps back.

I’m both excited and frustrated by some of its actions.

Because I have a large selection of single malt Scotch, I would have a sedative on hand if things got really bad. If necessary, a good bourbon could also help.

Now I had another nonsensical result.

Claude was supposed to search for new Japanese distilleries in the existing container /Distilleries and add them.

The first analysis was correct. But Claude deleted the container /Japanese Distilleries and created a new container /true under /Root. It created the new distilleries under this container. However, the previously deleted target container /Japanese Whiskies continued to be used in its instructions. That couldn’t work.

In the distillery notes, the data was created as text, but the filling of the attributes and the assignments of the prototype pDistillery were missing.

I explained Claude’s mistakes to it, and it admitted to having caused complete chaos.

It was able to fix it after several attempts, but had to vary the commands for moving.

I had to manually rework the setting of the prototypes and the assignment of the attributes.

But given that AI is a probabilistic Magic 8-ball, why do we expect it to behave like a human? It can’t, and doesn’t. And there’s the catch.

Currently, AI seems an odd cross of an eager undergraduate (eager but lacking in knowledge, experience and discretion) and someone with early-onset Alzheimer’s (so prone to forget everything once you leave the room). As we map the former to youth and the latter to age, this confounds our societal and intellectual training as to what is trustworthy. Our intellectual immune system is unprepared for this. Meanwhile, we can’t expect the AI corps to worry about this issue, as that doesn’t make money and so is commercially irrelevant to them. Ethics don’t make money, and who’s going to look after the shareholders?

So, if we trust AI when, if we bother to reflect, we shouldn’t, then the fault is ours at least in part. We’ve learned never to trust folk in marketing and sales: their goals and ours are not aligned. Add AI designers to that list of untrustworthy players.

This isn’t a luddite take: I’m massively impressed by the almost magical way AI gets some things right. Yet the brain-bending part is trying to figure out what we might expect AI to be able to get right. Working against us is that for most of us this is a ‘free’ resource and so obviously should ‘just’ work, or else why offer it?

It’s sort of an intellectual re-cut of Soylent Green†:

AI is made out of people’s knowledge. They’re making our intellectual food out of people. Next thing they’ll be breeding us like cattle for food. You’ve gotta tell them.

Then again, I’m sure the parchment makers felt the same when the paper makers turned up and any idiot could now write stuff down—how would civilisation survive?

I guess that having got used to trusting, broadly, software, we need to reacquire a sense of healthy skepticism when offered free samples. AI is in part faith, and the latter has, historically and persistently, not been without its problems.

†. Depressingly, I’m reminded Soylent Green was set in a putative 2022.

Keep backups! This is especially true if you’re asking Claude to do risky things like delete containers. I probably wouldn’t dare do this myself without a safety net.

Claude was supposed to search for new Japanese distilleries in the existing container /Distilleries and add them.

Here, I think, it would be interesting to know just what you asked Claude to do, and just what it did. Then we could see what went wrong.

As Claude does at the start of a session, I suggest you take a look at Tinderbox usage in Claude’s Readings container. That is what Claude knows about using Tinderbox. Notice that there’s little or nothing about adding attributes; if you’d like Claude to add new attributes, it might help to explain how to do it.

—

I find it helpful to guide Claude in tasks like this through shorter steps. For example, explain what a “Japanese distillery” is, and ask it to find some. If that works, ask it to count all of them. If that works, ask it to make a list in the $Text of a new note. If that works, then (perhaps) move the Japanese distilleries to a new container.

This is also, I think, what you’d do if you had students doing a lab session in Whisky 201. In a chemistry lab (especially) you don’t want first and second-year students doing stuff on the fly, because students don’t know what’s dangerous or pointless. You want them to take small, systematic steps, noting what happens at each step and stopping when something seems to be wrong. This is good practice in any case (hello Michael Faraday) but saves lots of small explosions, toxic material synthesis, and other mishaps that tend to cause wear and tear on the equipment and the instructor.
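The "small steps, stop when something seems wrong" routine can be written down as a tiny runner. This is a Python sketch under stated assumptions: `run_steps`, the step names and the simulated checks are all invented for illustration, and in a real session each check would be you, or Claude on request, confirming the result in the document.

```python
from typing import Callable, List, Tuple

Step = Tuple[str, Callable[[], bool]]


def run_steps(steps: List[Step]) -> List[str]:
    """Run steps in order and stop at the first one whose check fails."""
    log: List[str] = []
    for name, check in steps:
        if check():
            log.append(f"ok: {name}")
        else:
            log.append(f"stopped: {name}")
            break  # do not attempt later, riskier steps
    return log


# Simulated results; the third check fails on purpose.
found = ["/Distilleries/X", "/Distilleries/Y"]
steps: List[Step] = [
    ("find Japanese distilleries", lambda: len(found) > 0),
    ("count them", lambda: len(found) == 2),
    ("list them in the $Text of a new note", lambda: False),
    ("move them to a new container", lambda: True),
]
for line in run_steps(steps):
    print(line)
```

The move, the one step that can do real damage, is never reached if an earlier step fails.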