It’s very simple. The system-installed NL-prefixed taggers (except NLTags†) are the only ones that use macOS NLP features to scan $Text for matches the NLP thinks appropriate for that category. The reasoning used is not accessible to the user.

My understanding of the 3 NLP-powered taggers is that they are experimental: they suggest things. As the NLP infrastructure improves, so should the taggers. To see how far from accurate the process is at present (a limitation of the NLP, not of Tinderbox), here are just some of the ‘organisations’ detected in aTbRef by NLOrganizations:

- Cmd
- Coding And Queries
- Collapse All Containers
- Columns
- Commence Dictation
- Continental
- CovidNearMe.org
- Cow
- Creative Commons
- Cross
- Data
- Days
- Defines
- Delicious Monster
- Delta
- Development Peekhole
- Dictionary
- Dictionary.keys
- Displayed Attributes value

So, not something I’d rely on.

By comparison, in a user tagger the tagger’s syntax defines exactly what is or is not detected as a match.

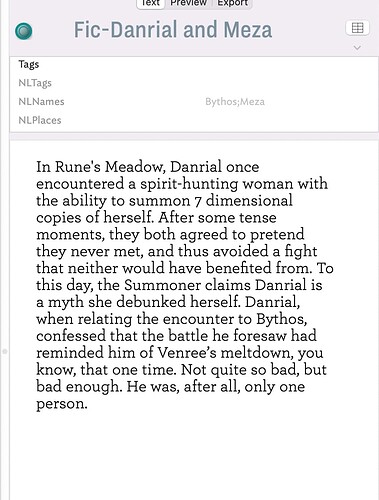

The process only looks at $Text. $NLNames should only contain the value ‘Meza’ for notes where that string occurs in the text. As documented, if that string is removed from such a note, the value should also be removed from the note’s $NLNames. If that isn’t occurring, I suspect tech support would like to know, as it would indicate some gremlin had crept in. I say tech support because they will likely need to see the whole TBX.

Yes. Tinderbox’s Help is unclear as to what is searched, and the run of its text implies only $Text is looked at. In fact, changes to $Text are used as a trigger to re-scan the note. Trawling through the release notes‡, buried in those for b502, I found this:

> Taggers now operate on both the note name and its text.

Taggers originally ran on $Text alone and the above change never filtered through to the Help docs§. I assume that changes to either $Text or $Name now trigger tagger re-processing of the note.
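As a rough mental model only, the behaviour described above (a user tagger’s patterns applied to both $Name and $Text, with values recomputed on each re-scan so that a value disappears once its matching string is gone) can be sketched in Python. The `TAGGER` table, the `run_tagger` function and the sample note are all hypothetical: this is not Tinderbox’s tagger file syntax or its internal code, and it does not model the NLP-based taggers at all.

```python
import re

# Hypothetical tagger table: tag value -> regex patterns that trigger it.
# NOT Tinderbox's tagger syntax; purely illustrative.
TAGGER = {
    "Meza": [r"\bMeza\b"],
    "plan": [r"\bplan(?:ning|ned)?\b"],
}


def run_tagger(name: str, text: str, tagger: dict[str, list[str]]) -> set[str]:
    """Return the tag values whose patterns match the note's name OR text.

    The result is rebuilt from scratch on every call, so a value drops out
    as soon as its matching string is removed from both name and text.
    """
    haystack = name + "\n" + text  # scan $Name as well as $Text (per b502)
    return {
        tag
        for tag, patterns in tagger.items()
        if any(re.search(p, haystack) for p in patterns)
    }


note = {"Name": "Meeting notes", "Text": "Call with Meza about the budget."}
print(sorted(run_tagger(note["Name"], note["Text"], TAGGER)))  # ['Meza']

# Remove the string from $Text: the value goes on the next re-scan.
note["Text"] = "Call about the budget."
print(sorted(run_tagger(note["Name"], note["Text"], TAGGER)))  # []

# Put the string in $Name instead: it is matched again.
note["Name"] = "Meza follow-up"
print(sorted(run_tagger(note["Name"], note["Text"], TAGGER)))  # ['Meza']
```

The point of recomputing the whole set, rather than only adding values, is that it matches the documented expectation that removing a string also removes the corresponding value.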

Further notes:

- NLP tagging is essentially experimental, especially the 3 taggers using Apple NLP.

- It’s not at all clear, but my understanding was that users are not expected to add content to the 3 NLP-using taggers’ notes in Hints. I may well be misinformed, but if I’m right, it might explain why some users are getting unexpected results.

- Re $NLTags. I believe the original idea was that it would look for a (never documented) set of things and tag them. One such possibility would be detecting a possible planning event and adding the $NLTags value “plan”. If that was tried, I think it no longer works: as at v9.5.2, NLTags can be thought of as a predefined, empty user tagger (i.e. the ‘NL’ prefix is slightly misleading). IOW, it does nothing unless you add some syntax as you would for a user-defined tagger. Of course, as NLP improves, this might change.

- NLP-related taggers are experimental, so unexpected outcomes should be expected. If they occur, they are better reported direct to tinderbox@eastgate.com rather than here, as (a) users can’t see this in-app process and (b) users don’t know what the correct outcome should be. It’s the problem of AI: it can give you an answer, but it struggles to tell you why or how it came to that conclusion!

- Outside the 3 NLP-using taggers, tag definition is regex-based. Enlightened self-interest would suggest users don’t use really complex regex in tagger definitions. There ought never to be such a need, but we do love experimenting, so I think the caution is worth noting.

†. Why? See here.

‡. These do get added, in re-summarised form, to Tinderbox Help with each release (though the change to taggers is not recorded there AFAICT). Backstage program members and beta testers have access to the source TBX recording the actual release notes; it is usually a bit behind the current beta, but it holds much additional and more recent information than Help. I used the latter to find the above.

§. As a result, aTbRef currently has this wrong. I’m seeking clarification (via a different channel) on a number of ambiguities re taggers and will update the pages when I know more. But it is the case that both $Text and $Name are scanned by taggers. I’ve already made a temporary change to the main tagger article (see the new heading “Scope of analysis”) pending a proper review of the tagger articles.