With Tom's help we figured out that the issues with the last version are related to a beta version of Tinderbox. Everything runs fine with the current public version of Tinderbox, but not with the beta.

Pulling in @eastgate

I sent MarkB an email, reporting the problem

My guess is that something might have changed with the interpreter in the beta

Just a hunch

Tom

Here is a new demo with the focus on keyword generation and automated linking using OpenAI.

You should be able to use the code found in the demo without any modification to add this functionality to your own Tinderbox files.

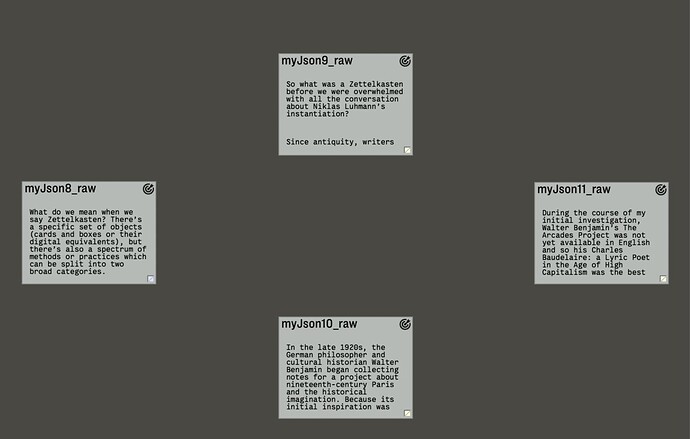

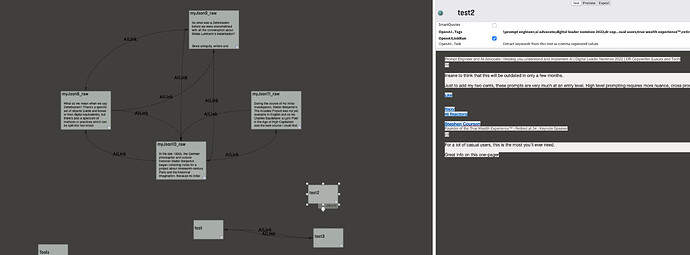

So for the demo there are four notes - this is the structure before I switched on the OpenAI engine:

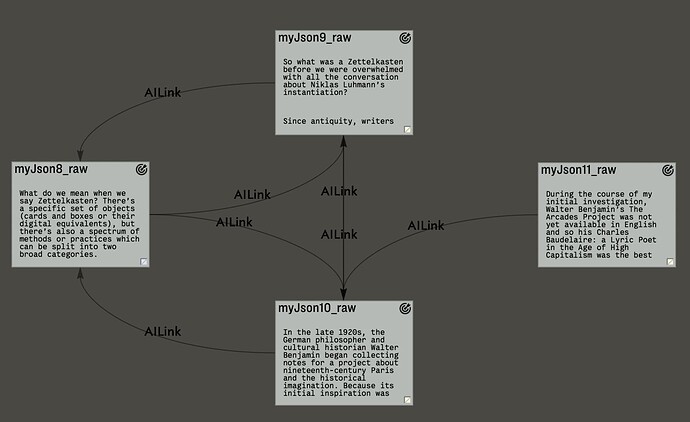

and this is what I got after the OpenAI engine processed the notes:

So if you think about what could be done with Tinderbox and OpenAI automatically and compare it to the great demo of “The brain” shown by Jerry Michalski after many, many years of manually linking the notes… this is incredible.

Have fun!

OpenAI-Demo_Keywords.tbx (227.3 KB)

Pretty slick Detlef!

I like the new edict approach. 1 question

Can you give me an example of how we (non-programmers) can easily adapt the code for a task other than keywords?

Ex:

Let’s say I want ChatGPT to get me the main opposing viewpoint to my note.

Is there a way to adapt the function to capture the new task?

Tom

Hi Tom,

that should (!) be an easy task:

- copy the cOpenAI note to your TBX file

- copy the gAIPreferences note to your TBX file and fill out the OpenAI Key attribute

If you would like to use the keyword approach - also copy the gAIStopWords note to your file and add the function runOpenAIKeywordExtractor_db(); (just copy this line of code) to the Edict of a prototype you use for your notes. The prototype has to have at least the attributes OpenAI_Task (always) and OpenAILinkRun (only for keyword extraction). That’s it.

If you would like to use a task other than extracting keywords, you only have to call:

myString = callOpenAI_db(theTextToWorkWith,2);

The 2nd parameter has to be a 2 (the 1 is reserved for keyword extraction). You write the task “return the main opposing viewpoint” into the OpenAI_Task attribute of each note (add it as the default value to the prototype) and you are ready to go.

Have fun!

And here is a new version where I replaced $Path with alternatives - nothing else has been modified.

OpenAI-Demo_Keywords.tbx (231.9 KB)

QQ: where do you place myString call? In the note attribute?

Tom

Hi Tom,

it depends where you want the result of the call to OpenAI to be added.

For example you could put this line into a Stamp:

var:string theResult = callOpenAI_db($Text,2);

Now you need to think about where to store the result. For example, you could create a second note and put the result into the $Text of this note. First you retrieve the $Name of the current note and add something like “_AI_viewpoint” to the name, then you create a new note, and then you put the result of the OpenAI call into the $Text of the new note. The complete code for your stamp:

    var:string theResult = callOpenAI_db($Text,2);
    var:string newNoteName = $Name + "_AI_viewpoint";
    var:string newNotePath = create(newNoteName);
    $Text(newNotePath) = theResult;

genius… thanks Detlef

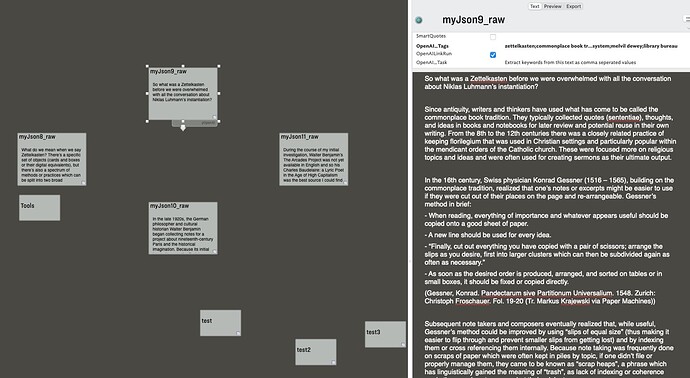

One last thing, I am getting the tags auto populated but unfortunately none of the links to the tags seem to be working. I realize it might take a bit of time and I have allowed for that. Here is a screenshot.

Is there a tweak I missed? The OpenAILinkRun is true on all notes.

Is anyone else having this issue?

Thanks

Tom

If you set OpenAILinkRun to false, the links will get recreated, and this should be fast. The function only calls OpenAI to create new tags if OpenAI_Tags is empty (and this takes some time).

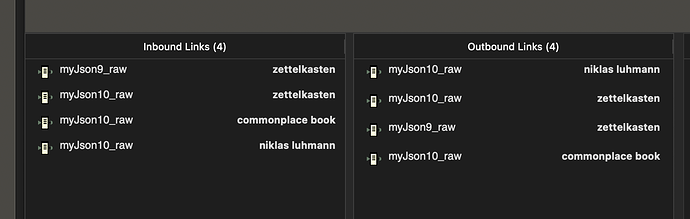

For example the note “myJson10_raw” should have:

Are you using the beta of TBX?

I am using the production version v606

Thanks. Now I am getting an AILink between notes, not the keywords, as shown here:

That’s how it should look. I named the links “AILink” because that gave me the opportunity to select those links and delete them later. Important for the development. I would like to have a link type and a link name. So I would be able to select all links by the type “AILink” and set the name to the keyword used - but I think TBX has only one option, the “linkTypeStr”.

Ahh

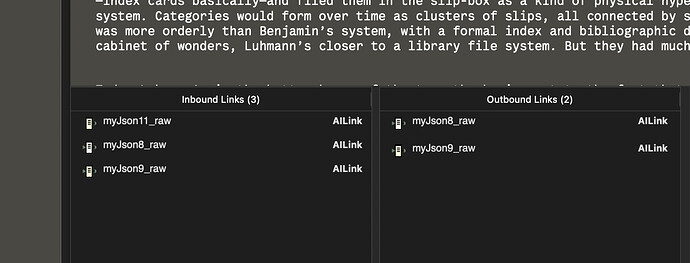

This function then looks up the keywords in all other notes and adds a link to this note. The same happens the other way around. I use the OpenAILinkRun attribute for this. Only if OpenAILinkRun is false, the links for this note will be refreshed. So Tinderbox will only change something if it needs to be changed. I don’t want to run a refresh if there is no need to - otherwise, a large file will demand a large amount of processing power.
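To make the idea concrete outside of Tinderbox, here is a minimal Python sketch of that "only do the work that is needed" logic. It is not the code from the demo: the `Note` class, `process_note` and the two callbacks are hypothetical names I made up for illustration, and the rule that fresh tags reset the link flag is my assumption.

```python
from dataclasses import dataclass, field


@dataclass
class Note:
    name: str
    text: str
    tags: list[str] = field(default_factory=list)  # stands in for OpenAI_Tags
    link_run: bool = False                         # stands in for OpenAILinkRun


def process_note(note, fetch_keywords, rebuild_links):
    """Do only the work the note's state requires; return what was done."""
    done = []
    if not note.tags:
        # Slow path: call the API only when the note has no tags yet.
        note.tags = fetch_keywords(note.text)
        note.link_run = False  # assumption: new tags make existing links stale
        done.append("fetched")
    if not note.link_run:
        # Fast path: refresh the links from the tags that already exist.
        rebuild_links(note)
        note.link_run = True
        done.append("linked")
    return done
```

Calling `process_note` a second time on the same note does nothing, which is the point: on a large file, untouched notes cost almost no processing.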

Question: what do the links between notes represent? I assume common keywords… correct? If so, what is the threshold? How many keywords must two notes have in common?

Thanks

tom

It is a one keyword = one link relation. Since TBX will not add more than one link between two notes (one for each direction, to be precise), it makes no difference whether one, two or more keywords match, as long as at least one has been found in both notes.
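As a rough illustration of that rule, here is a small Python sketch (not the Tinderbox code from the demo). The function name `keyword_links` and the dictionary of tags per note are assumptions for the example; it simply treats two notes as linked in both directions when their keyword lists overlap in at least one term.

```python
from itertools import permutations


def keyword_links(tags_by_note):
    """Return the directed (source, target) links between notes sharing a keyword."""
    links = set()  # a set keeps at most one link per direction for each pair
    for source, target in permutations(tags_by_note, 2):
        if set(tags_by_note[source]) & set(tags_by_note[target]):
            links.add((source, target))
    return links
```

With notes A and B sharing two keywords and note C sharing none, the result contains only the pair A to B and the pair B to A, no matter how many keywords overlap.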

Each link can have only one link type (or none). If A needs to link to B by 2 (or more) discrete link types, make an additional link. That is how things stand as at v9.5.2 (and I’m not hinting at a forthcoming change).

Adding extra links isn’t an issue for Tinderbox, but might look messy in views that draw links. More precisely in Map and Timeline, but not in hyperbolic view. The latter is because only one line is drawn between any pair of notes, regardless of the number of links.

Remember too that for any link type, the visibility of that link type can be toggled on/off in the Inspector. Shortly you will be able to also set that visibility state in action code but for now, in v9.5.2, you have to use the Inspector.

FWIW, from this you will see that whilst in Browse Links you can alter a link’s link type, in Action code you do this by deleting the existing link (with the wrong type) and adding a new one (with the right type). Tip: when doing this, a good plan is to add the new link before deleting the old as this ensures the two notes remain linked throughout (i.e. you don’t lose sight of which two notes are in play).

Mark - thanks a lot for the explanations! As I wrote before: I need to set the link type to get a handle for removing those links while testing my code.

In my link the relevant code is located in the function processOpenAI_Keywords_to_Links_db.

If you change this line:

createLink(relatedNote,theNote,"AILink");

to

createLink(relatedNote,theNote,curKeyword);

Each link will get the keyword as type. But this will give you many link types and I don’t know if this is a good idea.

I can’t claim to know the answer. Tinderbox won’t mind the extra links. But your map views might look a bit messy. But imminent changes to Hyperbolic view (HV) will make such a scenario easier. Yes, in a map you control where a note goes on the screen whereas in HV the app does the layout. But, I think HV is the one to use going forward if you are deliberately using a lot of link types.

Another aspect is whether the pertinent info is (only) in the link title or in the note(s) being linked. IOW, is your aim to see text in the view or will you interrogate the TBX info another way?

Murphy’s Law dictates our intuited choice won’t be the easiest method (or possible) in Tinderbox. However, Tinderbox is very flexible and the answer is to pivot the task: decide what is important to see (as text on screen) and then where to store it.

HTH

For those playing with my OpenAI demo - here is a new version with some small and one major improvement.

First of all there are now two notes, gAIStopWords and gAIReplaceWords. They are used to clean the returned keyword list. All terms in gAIStopWords will get removed and all terms in gAIReplaceWords will get replaced (format: original:replaced;).
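For anyone who wants to see the cleaning step spelled out, here is a minimal Python sketch of the idea. It is not the code from the demo: `parse_replacements` and `clean_keywords` are hypothetical names, and both the order (stop words first, then replacements) and the lowercasing are my assumptions. Only the `original:replaced;` format comes from the description above.

```python
def parse_replacements(spec: str) -> dict[str, str]:
    """Parse 'original:replaced;' pairs into a lookup table."""
    table = {}
    for pair in spec.split(";"):
        if ":" in pair:
            original, replaced = pair.split(":", 1)
            table[original.strip().lower()] = replaced.strip().lower()
    return table


def clean_keywords(keywords, stop_words, replacements):
    """Drop stop words, apply replacements, remove duplicates and sort."""
    stops = {word.strip().lower() for word in stop_words}
    cleaned = set()
    for keyword in keywords:
        keyword = keyword.strip().lower()
        if not keyword or keyword in stops:
            continue  # assumption: stop words are removed before replacing
        cleaned.add(replacements.get(keyword, keyword))
    return sorted(cleaned)
```

A replacement such as `zettelskasten method:zettelkasten;` folds a variant spelling into the main keyword, so both notes end up with the same tag and can be linked.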

The important change is the support for the GPT-3.5-Turbo model. Until now I used the text-davinci-003 model. The ugly thing is that it is not only the name of the model that changes but the format of the cURL parameter too. Also the returned JSON data has a different format. Don’t know why they are changing those basics but we have to deal with it.

The gpt-3.5-turbo format:

    {
      "id": "chatcmpl-7TmrxSml8dtdFEW0xrUzA6Yf88wyj",
      "object": "chat.completion",
      "created": 1687333721,
      "model": "gpt-3.5-turbo-0301",
      "choices": [
        {
          "index": 0,
          "message": {
            "role": "assistant",
            "content": "Zettelkasten, workflow, learning, producing, knowledge, texts, categories, knowledge management, writing, reading, acquisition, organization, collecting, processing, paper, computer, notes, Mac, mental gears, switching, focus, passage, margin."
          },
          "finish_reason": "stop"
        }
      ],
      "usage": {
        "prompt_tokens": 317,
        "completion_tokens": 52,
        "total_tokens": 369
      }
    }

and the former text-davinci-003 format.

    {
      "id": "cmpl-7TmxYrGV9M29klQmF6kQ5ud5SBMzb",
      "object": "text_completion",
      "created": 1687334068,
      "model": "text-davinci-003",
      "choices": [
        {
          "text": "\n\nZettelkasten, Knowledge Management, Writing, Reading, Collecting, Processing, Notes, Mac, Focus, Mental Gears",
          "index": 0,
          "logprobs": null,
          "finish_reason": "stop"
        }
      ],
      "usage": {
        "prompt_tokens": 338,
        "completion_tokens": 28,
        "total_tokens": 366
      }
    }

This is just for the record - I adjusted the functions accordingly.
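If you want to see the difference between the two response shapes in code, here is a short Python sketch (outside of Tinderbox, so not the functions from the demo). The names `extract_completion_text` and `split_keywords` are hypothetical; the only things taken from the samples above are the field paths `choices[0].message.content` and `choices[0].text`.

```python
import json


def extract_completion_text(raw_response: str) -> str:
    """Return the generated text from either of the two response shapes."""
    data = json.loads(raw_response)
    choice = data["choices"][0]
    if "message" in choice:
        # chat.completion shape (gpt-3.5-turbo)
        text = choice["message"]["content"]
    else:
        # text_completion shape (text-davinci-003)
        text = choice["text"]
    return text.strip()  # the davinci sample starts with two newlines


def split_keywords(text: str) -> list[str]:
    """Split a comma separated keyword string into lowercase terms."""
    terms = [term.strip().rstrip(".").lower() for term in text.split(",")]
    return [term for term in terms if term]
```

Branching on the presence of the `message` key means the caller does not need to know which model produced the response.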

Now you can enter text-davinci-003 or gpt-3.5-turbo as model in the gAIPreferences note. Everything else works like before. If you implement the OpenAI function into your own TBX file:

- copy the cOpenAI note into your Library

- copy the gAIPreferences, gAIStopWords and gAIReplaceWords to the top level of your file

- add runOpenAIKeywordExtractor_db(); to the $Edict of the prototype you use for the notes to be processed by the OpenAI engine

- take a look into the ReadMe! note of my demo

The two models return different results - you have to play with them to see where you get the better performance for your prompt. For example, using both models on the same note in my demo I got:

davinci

arcades project;bibliographic data;boredom;cabinet of wonders;coding system;common thematic thread;commonplace book;convolute;cultural historian;fashion;formal index;gambling;german philosopher;german sociologist;gnomic aphorisms;how to take smart notes;indie software;interlinked fragments;iron construction;library file system;niklas luhmann;old tour guides;panoramas;passagenwerk;physical hypertext;prostitution;quote;slip-box;sociological essays;surrealist poem;sönke ahrens;thematic cluster;walter benjamin;zettelkasten

gpt-3.5-turbo with the prompt “Extract keywords from this text as comma seperated values”

32 colored shapes;70 books;actual arguments;arcades;bestselling book;bibliographic data;boredom;cabinet of wonders;career;categories;central intellectual focus;coding system;commonplace book;convolutes;cottage industry;cultural historian;died;dissertation;early 90s;english translation;entire collection;fashion;formal index;gambling;german;gnomic aphorisms;grad school;harvard university press;historical imagination;how to take smart notes;ill-fated attempt;indie software;initial inspiration;inspire;intellectual labyrinth;interactive code;interlinked fragments;iron construction;late 1920s;library file system;linear book;literary theory types;managing;mysterious;mythical white whale;niklas luhmann;nineteenth-century paris;note;occupied france;panoramas;paris arcades;parisian passages;passagenwerk;philosopher;physical hypertext;private codes;private system;productive life;project;prostitution;publishing;quotations;reading notes;research;retracing;scholar;slip-box;sociologist;spain;strange enchantment;structure;suicide;surrealist poem;sönke ahrens;technique;thematic connections;thousand pages long;tragically;two decades after;walter benjamin;zettelkasten;zettelskasten method

gpt-3.5-turbo with the prompt “Extract main keywords from this text as comma seperated values”

32 colored shapes;70 books;arcades project;astonishingly productive life;bestselling book;bibliographic data;boredom;cabinet of wonders;categories;central role;coding system;commonplace book;convolutes;cultural historian;enchantment;english translation;fashion;formal index;gambling;german philosopher;grad school;historical imagination;how to take smart notes;indie software;intellectual labyrinth;interlinked fragments;iron construction;late 1920s;library file system;linear book;linked;literary theory types;modern shopping malls;mysterious arguments;mythical white whale;niklas luhmann;nineteenth-century paris;note;occupied france;panoramas;paris arcades;parisian passages;physical hypertext;private codes;private system;prostitution;publishing;quotations;research and reading notes;scholar;slip-box;spain;suicide;surrealist poem;sönke ahrens;technique;thematic connections;tragic death;walter benjamin;weeks retracing benjamin’s steps;writing dissertation;zettelkasten;zettelskasten method

gpt-3.5-turbo is the same model that powers ChatGPT. It is significantly less expensive than OpenAI’s existing GPT-3.5 models. In fact, it is ten times cheaper, and it has been shown to outperform text-davinci-003 on most tasks.

Have fun!

OpenAI-Demo_Keywords.tbx (256.3 KB)